08.23.11

User Generated Content

How we got where we are today

Computers don’t know anything. They have to be programmed and designed extensively to make sense as tools for accomplishing even the most basic of tasks. If you wanted to draw a circle using an untrained computer, you would have to tell the computer what a “circle” is, how to draw a line, and even that there is such a thing as a canvas on which to draw. Today this is not the case. Proficient tools for accomplishing most tasks already exist, so users don’t have to know how to program in order to get things done. Photoshop, for example, makes drawing a circle into a single drag-and-drop action so intuitive that users never have to consider the underlying procedures or equations.

A computer connected to the internet becomes not just a tool for drawing circles, but a platform for relationships and a magical container for all the forms of media that preceded it. The major websites on which artists have come to work and play (Facebook, Tumblr, YouTube, and Twitter) each do something a little different, but they are all free, mass-market, “Web 2.0” tools, intended to connect users to each other through content they contribute: status updates, pictures, videos, and profiles. Everyone uses these sites to keep up with friends and family and to express their identity, but for artists they also function as a casual, social, and surprisingly robust publishing and distribution system, and often as the subject of the work itself. There used to be a saying about freedom of the press being guaranteed only to those who own one, but today’s tools are so far beyond print that an artist working with the internet has little use for something so antiquated as a printing press.

For the computer to become so widely used as a live, work and play space, it had to be modeled largely after the life which preceded it. On his Post Internet blog Gene McHugh writes, “[the] ‘Internet’ became not a thing in the world to escape into, but rather the world one sought escape from…sigh…It became the place where business was conducted, and bills were paid. It became the place where people tracked you down.” Likewise, everyday life has become “technologized” to the extent that offline interactions now occur in the language of technology. A good conversation puts us on “the same wavelength,” as if we’re radios. The word “like” is becoming primarily a verb used to describe the action of “liking” a Facebook page.

Facebook didn’t exist until 2004, YouTube 2005, Twitter 2006, and Tumblr 2007. Networked technology is now present in everything from our always-on cellphones to connected urban infrastructures to autonomous global stock markets. Our lives have become so integrated with networks in such short order that it is almost unimportant to distinguish between communication on and offline. While my grandpa grins from ear to ear each time we video chat and talks about how we’re living in the future, my little sister routinely “hangs out” with her friends online, leaving the video window open in the background as a kind of presence. The perpetual connectedness of rising generations has kicked technology’s status as spectacle into rapid decline.

John Durham Peters, who ponders “being on the same wavelength” in his book Speaking into the Air: A History of the Idea of Communication, writes that “communication as a person-to-person activity became thinkable only in the shadow of mediated communication.” He writes in the past tense with a certain sense of finality, as if this shift happened at a certain point in time and never changed. But new tools are being introduced every day, and with each system update or new website we sign up for, the kinds of mediation we engage with change. It’s not so much that technology has crept into everyday life but that there is a back-and-forth exchange of metaphor between online and off; a continuous push and pull between fashioning our tools and being shaped by them.

How our tools have changed

In 1985, Marvin Minsky published The Society of Mind, a book that attempts to explain the possibility of artificial intelligence by atomizing complex human activities. For example, “making something out of blocks” is broken down into a tree of yes or no actions called agents. Because his reductionist philosophy is admittedly not very technical, Minsky is able to describe how we interact with technology in a remarkably clear way:

“When you drive a car, you regard the steering wheel as an agency that you can use to change the car’s direction. You don’t care how it works. But when something goes wrong with the steering, and you want to understand what’s happening, it’s better to regard the steering wheel as just one agent in a larger agency: it turns a shaft that turns a gear to pull a rod that shifts the axle of a wheel. Of course, one doesn’t always want to take this microscopic view; if you kept all those details in mind while driving, you might crash because it took too long to figure out which way to turn the wheel. Knowing how is not the same as knowing why.”

Minsky’s distinction between having the ability to use a tool and understanding how it works is even more important today than it was in the 80’s because technology has become better, closer, and harder to distinguish, though it would appear that nothing has changed. Several years ago when my mom’s car stalled on the highway, the repairman told her that the car was physically in fine shape but that its internal computer—an intermediary between the pedals and engine that serves as a sort of control center—had failed. A specialized code repairman had to be called in to run a diagnostic and figure out what went wrong.

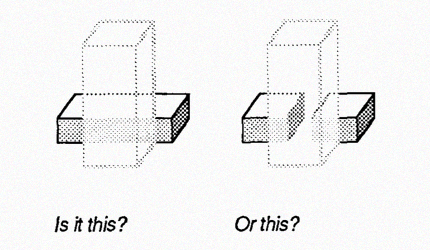

When Minsky wrote about cars, the underlying system was only hidden behind a facade of metal and plastic. While the workings of a car have always been complex, back then there were physically connected rods and gears between steering wheel and axle, which could be taken apart piece by piece and put back together in order to understand how the car works. Computers allow for an interface, like pedals and a steering wheel, to be decoupled from its mechanics. In a computerized car, changes to the steering wheel still result in a shifting of the axle, but these actions are triggered by code, sensors, chips and wires (or even wireless signals) that obviate the need for decipherable physical connections. If we’re not granted access to the obfuscated code loaded onto our cars’ computer chips, we can no longer truly take our cars apart and put them back together again, and we have lost the potential to understand yet another one of the systems behind our everyday lives.

When we can’t deal with technology on its own terms, as code, we rely on the metaphors presented by its interface to come up with stories about how it works. My mom didn’t even know her car had a computer in it until it broke down because it looked and worked just like every other vehicle she had owned. With a bit of cleverness, programmers and designers are able to make a computerized car appear no more complicated than a car from the 80’s, though a computer is invisibly routing input from the steering wheel to the axles and optimizing for fuel efficiency. This is a major reason our attempts at understanding the tools we work with today more often result in a series of built-up misunderstandings.

The car is an example of a relatable system that is secretly complex, but we also tend to project a sense of complexity onto simple systems. Paul Ford’s essay Time’s Inverted Index illustrates the way we filter a computer’s output, like a keyword search covering ten years of email, through our emotional brains:

“It is not the fault of the software, which is by definition unwitting. No one writing code said, “Let’s totally mess with his perception of self and understanding of free will.” The code sees an email that is filled with words as something like a small bucket that is filled with coins. It takes the coins out one by one and stacks them in squares marked out for each denomination, adding a slip of paper between each coin that indicates the coin’s bucket of origin. Then it does the same with the next bucket. There are many denominations of coins; a truly big table, called an inverted index, is needed to hold them all. The coin-sorting program cannot think or advise, but it does allow you to go to a pile of coins on the table, pick them up, and know their buckets of origin. It’s my unrigid brain that turns a sorted stack of coins into a story—so there I was, querying against my life, tapping the touchscreens of fate and clicking the mouse of destiny, all of it suffused with a sort of sweet nostalgia. (Of my emails, 893 mention libraries; 311 mention libraries and love.)”
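Ford’s coin-sorting image maps closely onto a few lines of code. What follows is only a minimal sketch in Python, not the software Ford was using: the sample emails, the function names `build_inverted_index` and `search`, and the crude tokenizer are all assumptions made for illustration. Each word is a denomination, each email is a bucket, and the index records which buckets every denomination came from.

```python
import re
from collections import defaultdict


def build_inverted_index(emails):
    """Map each word (the coin's denomination) to the set of
    email ids (the buckets of origin) in which it appears."""
    index = defaultdict(set)
    for email_id, text in emails.items():
        # Crude tokenizer (an assumption): lowercase runs of letters.
        for word in re.findall(r"[a-z']+", text.lower()):
            index[word].add(email_id)
    return index


def search(index, *words):
    """Return the ids of emails that contain every one of the given words."""
    if not words:
        return set()
    postings = [index.get(word.lower(), set()) for word in words]
    return set.intersection(*postings)


# Hypothetical mailbox, invented for this example.
emails = {
    1: "Meet me at the library after work",
    2: "I love the library on Sundays",
    3: "Love, always",
}

index = build_inverted_index(emails)
print(sorted(search(index, "library")))          # [1, 2]
print(sorted(search(index, "library", "love")))  # [2]
```

The sketch does no stemming, so “library” and “libraries” would land in separate piles, and it knows nothing about what any email means. The second query is just the overlap of two piles of ids; any story about libraries and love is supplied by the person reading the result.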

Early experiments in Information Retrieval aside, before web search, which was only put into practical use in the 90’s, there was really no such thing as search. Yet search has become a paradigm. A fundamental way we expect to access information. An inalienable right. I searched Google 46 times today alone. If we’re not looking critically at the way technology orders our experience, we are operating on juxtapositions of information which may be arbitrary, unimportant, or (as is the case with the computerized car) beyond understanding. On top of that we interpret these results as bearing emotion, utility and truth: the sweet nostalgia to which Ford refers.

Google is working hard to maintain the feeling of simplicity and effortlessness while simultaneously increasing the underlying complexity that makes results better. A promotional site boasts, “at any given time there are 50-200 different versions of our core algorithm out in the wild. Millions more when you realize your search results are personalized to you and you alone.” There is still only one search box, but there are as many different result sets as there are visitors to the site. While my mom misunderstood her car, she at least had a mental model of cars from before computers to believe in. Software with no real-life counterpart can be tricky because all we have to go on in creating our stories is an abstract, utilitarian interface like a textbox, a search button, and some basic ideas about servers sending bits to and fro. The same clever design that makes complicated software easy to understand can lead users to a kind of collective hysteria as they try to decipher hidden meanings in the way their friends are ordered on Facebook.

Abstract inventions like search boxes also find their way back into real life in curious ways. I find myself strangely frustrated in offline situations that lack Google’s utility, like a used bookstore with no searchable catalog. Google has not just changed expectations about the findability of information, it has shaped the way that we ask for it. We used to ask questions of a librarian or an expert but computers are not yet adept at processing “natural language,” so we meet them halfway by reducing our questions to queries composed of keywords and operators. When Google tweaked their interface to instantly show results before users even finish typing a query, half-typed words and phrases immediately started to show up in the referral logs of my website.

Life in the shadow of technology

As a cognizant user, Ford is careful not to ascribe blame to his computer, but in their paper Bias in Computer Systems researchers Batya Friedman and Helen Nissenbaum examine the ways in which “groups or individuals can be systematically and unfairly discriminated against in favor of others by computer systems.”

“Computer systems…are comparatively inexpensive to disseminate, and thus, once developed, a biased system has the potential for widespread impact. If the system becomes a standard in the field, the bias becomes pervasive. If the system is complex, and most are, biases can remain hidden in the code, difficult to pinpoint or explicate, and not necessarily disclosed to users or their clients. Furthermore, unlike in our dealings with biased individuals with whom a potential victim can negotiate, biased systems offer no equivalent means for appeal.”

The examples presented here are less concerned with the problem of users being intentionally manipulated than with the opportunity cost of docilely operating within dominant software patterns; even so, Friedman and Nissenbaum’s unpacking of computer biases is eye-opening. Of the three types of bias the authors have identified, we have already encountered two: Preexisting Bias and Technical Bias. “Preexisting Bias has its roots in social institutions, practices, and attitudes.” The computer environment described by McHugh that all too closely mimics real world drudgery is an example of how computers imbued with real world metaphors inevitably carry unnecessary conventions from the physical into the digital realm. The computerized car which masks its complexity and impenetrability behind an interface indistinguishable from its pre-computer predecessors also exhibits this type of potential bias. “Technical bias arises from technical constraints or considerations.” Complex Google searches with results sorted using a cryptic algorithm, as well as the stupidly simple keyword searches which Ford found himself interpreting as an accurate representation of events, are examples of the way that technical considerations can manipulate users intentionally or thoughtlessly.

While Friedman and Nissenbaum note that almost all instances of computer bias can be classified as either Preexisting or Technical, they identify a third type called Emergent Bias, which has great ramifications for artists working with Web 2.0 tools. Emergent bias arises “after a design is completed, as a result of a change in the context or usage of a system.” The hacking and retrofitting of existing software in the service of creative expression or critique is often celebrated as clever and resourceful, but what assumptions of that software are still being carried by the work? Any time the ways a user wants to work with a system butt up against its constraints, and the work they are doing is disadvantaged as a result, it can be classified as a case of Emergent Bias called “user mismatch.” Unlike victims of bias, however, artists seem to seek out these problematic areas.

The meta-ness of an artist like Ann Hirsch whose Scandalishious project satirizes a typical relationship with social media using YouTube itself seems to be stretching what is considered a “typical use” of the site. Typical use is hard to pin down because a site dedicated to user generated content can foster a culture of typical users as well as a group that is critical of them. Hirsch’s work and others like it may actually be strongest at the points where it becomes unclear if the artist is omnisciently addressing big problems and questions or enjoying being a part of the scene. On such general platforms neither group seems to be at a distinct disadvantage, especially because most users would agree that the companies behind Web 2.0 tools do not appear to be acting maliciously against them in providing these free web services.

Artists attempting to be critical of our relationship to social networking sites should follow the money back to advertising, where they will find irony in embedding themselves within the systems they seek to explicate. In You Are Not a Gadget, Jaron Lanier writes:

“The customers of social networks are not the members of those networks…the whole idea of fake friendship, is just bait laid by the lords of the clouds to lure hypothetical advertisers…who could someday show up…If the revenue never appears, then a weird imposition of a database-as-reality ideology will have colored generations of teen peer group and romantic experiences for no business or other purpose.”

It’s worth considering on a broad level that the very idea of user-generated content is an assumption perpetuated by Web 2.0 tools which has simply come to dominate internet art. Tumblr, the only one of the four major tools mentioned that does not heavily plaster content pages with display advertising, appears to be taking a financial loss and burning through venture capital money to grow their userbase and integrate their product more deeply into users’ lives. Starting a conversation about how computers shape our lives is important, but responses that don’t functionally uproot those systems will have bizarrely situated a generation of artists as a product whose criticality is being sold back to advertisers.

In defense of building tools

It doesn’t matter how critical what you’re saying is; the companies who thrive on user generated content would like very much for you to continue generating it at length. Content is an icky word for a reason: it doesn’t make any distinction between what is being said or to whom; it is something measured in click-throughs and conversion rates rather than poignancy or intelligence. In Marshall McLuhan’s famous words, “the medium is the message.” Power lies with the makers of mediums, or to use a term less slippery within an art context, tools and platforms. Though it has been described throughout this essay how design patterns, behaviors, and world views can swiftly circulate between the real and the virtual, it is rare to see users, artists especially, being the ones to create tools directly.

Creating new systems does not disallow hacking on top of existing ones; it just means knowing the full extent of the existing system’s biases and very intentionally keeping or discarding them. There can be no compromise. Even a tiny adjustment to the way an existing system introduces bias might necessitate throwing out everything on which it is built and starting from scratch. It could be said that tasking artists with building alternative systems for digital existence is too practical. In addition to the emotional intelligence it takes to dissect the ways computers warp our relationships with ourselves and each other, creating tools that address this requires experience with code. However, organizations like Creative Time have been pushing hard at the idea of “useful art” that seems to be nothing more than a thinly veiled draft of artists into the humanitarian workforce. In a strange way, experiments with technology seem to go beyond protestors, politicians, and non-profit organizations in their potential to create change. “[Technologists] tinker with your philosophy by direct manipulation of your cognitive experience, not indirectly, through argument. It takes only a tiny group of engineers to create technology that can shape the entire future of human experience with incredible speed,” writes Jaron Lanier.

In a blog post titled In Defense of Building Tools, Derek Willis, a developer for The New York Times, writes:

“A good tool doesn’t just make it easier for a reporter to create a story. It actually seeds the story, or makes it possible for more people in a newsroom to collaborate. When you have data but no tool, you become a gatekeeper of a sorts – which is appropriate in many circumstances, but not all. I can’t possibly know what my colleagues are thinking about, considering or being alerted to, but I can make it easier for them to test out theories and do some exploration on their own. Some of them prefer to do their own work, and we certainly miss some opportunities for apps that way. But others consult with me quite a bit, since they now have a much better idea of what we have and what we might be able to do with it.”

These examples are tailored towards journalism but the logic applies soundly to artists working with the internet too. In the words of Clay Shirky, newspapers are seeing “old stuff get broken faster than the new stuff is put in its place.” Maybe because of this, journalism has been one of the more progressive fields in terms of adopting custom-made tools and interfaces. In another example from the New York Times, comment threads for highly discussed articles have been elegantly improved by integrating hard data from users that allows thousands of responses to be mapped onto a zeitgeist-visualizing matrix. The authors of a book called Newsgames even posit that journalism has a lot to learn from videogames! As a rich experience that cannot exist on any previous medium alone, the authors propose, so-called “newsgames” could teach people the dynamics of complicated systems through simulated first-hand experience, rather than by inference from an account of events or by reading dry explanations. Newspapers and other “old media” employ a paid content publishing model, as opposed to relying on the explosion of free user generated content. While the authors of Newsgames write that these would be supplements rather than replacements for news, it seems to be a vision that looks beyond content and towards systems.

Knowing how even the mundane aspects of computing like files and folders can filter back into the way we think and behave, what if we redesigned these aspects of computing to incorporate more inventive modes of working? Planetary is an iPad app by a company called Bloom which visualizes a user’s iTunes library in the form of a galaxy rather than a spreadsheet. While at first I was put off by how superfluous this seems, the designers believe “these Instruments aren’t merely games or graphics. They’re new ways of seeing what’s important.” What if instead of files and folders, we visualized what we are working on like a solar system? Turns of phrase could be set into orbit at different velocities and a solar forecast of our filesystem would determine how things get pulled together. I often find myself navigating a crowded array of overlapping windows, which seems symptomatic of multitasking or ADD, so how would a system that takes this mindset as a starting point ideally work? These bespoke systems don’t have to save the world. What did files and folders ever do for you? Lanier seems to hint that popular interfaces become pervasive more by conspiracy or aggressive advertising than on merit anyway. Simply introducing different kinds of arbitrariness may lead to accidental discoveries about the way we work and live.

Wired Magazine has called Xanadu, a system proposed by Ted Nelson (inventor of the word “hypertext”), both “the most radical computer dream of the hacker era” and “the longest-running vaporware project in the history of computing.” Xanadu assumes an entirely different relationship with “content” than we are used to. The web as we know it is made up of documents which link between each other. Xanadu’s key difference is its rigid structure, in which all links must be bi-directional. For example, if you wanted to take a piece of one document and put it into another, instead of “copying and pasting” you would do something called “transcluding.” While on a functional level copying and pasting takes a series of characters in one document and replicates them in another, transclusion less redundantly embeds a linked version of the original document into the new one. Clever design might make the interaction of transcluding no more complicated than a copy and paste, but transclusions are more meaningful because they can be followed like links from use to use. If the author of the transcluded fragment had derived it from somewhere else, a user could continue to follow the phrase through all of its incarnations. Xanadu would create radically different possibilities and limitations in both digital and physical space. In fact, Nelson envisioned that Xanadu’s linked attribution could be tied into a micropayment system that would end copyright disputes and provide content creators with steady income, unlike the theories of “free” which have popped up to explain our present situation. A collage of images made with source material from ten different people would be traceable back to each of them, and payments as small as a few cents could trickle back to the image owners for every single use. In the bi-directionally linked world of Xanadu, the metaphor of files and folders becomes unimportant too. Ted Nelson evocatively describes flying through documents in 3D gaming space.
Imagine how de-emphasizing carried-over hierarchical metaphors like files and folders might affect the way we work in real life, as we chew through hyper-textual trains of thought. I’m doubtful that such a rigid system could ever be of use to a large group of people, but the prospect of such a bold experiment with more opinionated software excites me.
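The difference between pasting and transcluding is easier to see in a toy model. The sketch below is in Python and is emphatically not Xanadu’s actual design: the `Docuverse`, `Document`, and `Transclusion` names, the character-offset addressing, and the sample documents are all hypothetical, and it assumes documents never transclude each other in a loop. A transclusion stores a pointer to a span of its source instead of a copy of the characters, and the store records each link in both directions.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Transclusion:
    source: str  # id of the document being quoted
    start: int   # character offsets into the source's rendered text
    end: int


@dataclass
class Document:
    doc_id: str
    parts: list = field(default_factory=list)  # plain strings or Transclusions


class Docuverse:
    """Hypothetical document store that keeps links in both directions."""

    def __init__(self):
        self.docs = {}
        self.used_in = {}  # source id -> ids of documents that transclude it

    def add(self, doc_id, *parts):
        self.docs[doc_id] = Document(doc_id, list(parts))
        for part in parts:
            if isinstance(part, Transclusion):
                # Record the backward half of the two-way link.
                self.used_in.setdefault(part.source, set()).add(doc_id)

    def render(self, doc_id):
        """Resolve every transclusion against its source at read time."""
        out = []
        for part in self.docs[doc_id].parts:
            if isinstance(part, Transclusion):
                out.append(self.render(part.source)[part.start:part.end])
            else:
                out.append(part)
        return "".join(out)

    def origins(self, doc_id):
        """Follow a document's transclusions back through all their sources."""
        found = set()
        for part in self.docs[doc_id].parts:
            if isinstance(part, Transclusion):
                found.add(part.source)
                found |= self.origins(part.source)
        return found


verse = Docuverse()
verse.add("essay", "The medium is the message.")
verse.add("notes", "McLuhan: ", Transclusion("essay", 4, 10))
verse.add("collage", Transclusion("notes", 0, 15), "!")

print(verse.render("collage"))           # McLuhan: medium!
print(sorted(verse.origins("collage")))  # ['essay', 'notes']
print(sorted(verse.used_in["essay"]))    # ['notes']
```

Because `collage` holds references and no copied characters, the phrase can be traced through `notes` back to `essay`, and `essay` can list where it has been used. The `origins` walk is the kind of lookup that attribution, or a micropayment scheme of the sort Nelson imagined, would depend on.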

The way things are now is more like a pileup of metaphors and recycled code than laws of interaction which are set in stone. As can be seen in the examples above, designing computer systems is a strangely direct way of altering how people experience the world and relate to each other. Perhaps in the coming years artists will be able to create new platforms with the conceptual backbone that is lacking in today’s popular offerings. Artists who are already thinking critically about the way networked technology orders our experience might try experimenting with becoming makers of mediums, if only so that whatever comes after Web 2.0 offers more to artists than free hosting of its own critique.

Comment

You might be interested:

http://networkcultures.org/wpmu/weblog/2011/07/15/new-inc-research-network-unlike-us-understanding-social-media-monopolies-and-their-alternatives/

On the project mailing list now, thanks!